Don’t Panic

“The Hitchhiker’s Guide to AI Ethics is a must-read for anyone interested in the ethics of AI. The book is written in the style and spirit that has inspired many sci fi authors. The author’s goal was not only for a short, yet entertaining, but for an entire series.”

Sounds about right! Any guesses who wrote this rave review?

A machine learning algorithm. OpenAI’s GPT-2 language model is trained to predict text. It is huge and complex, and takes months of training over tons of data on expensive computers; but once that is done, it is easy to use. A prompt (“The Hitchhiker’s Guide to AI Ethics is a”) and a little curation were all it took to generate my rave review using a smaller version of GPT-2.

The text has some obvious errors, but it is a window into the future. If AI can generate human-like output, can it also make human-like decisions? Spoiler alert: yes it can, and it already does. But is *human-like* good enough? What happens to TRUST in a world where machines generate human-like output and make human-like decisions? Can I trust an autonomous vehicle to have seen me? Can I trust the algorithm processing my housing loan to be fair? Can we trust the AI in the ER enough to make life and death decisions for us? As technologists we must flip this around and ask: How can we make algorithmic systems trustworthy? Enter Ethics. To understand more we need some definitions, a framework, and lots of examples. Let’s go!

Building the Compass

To start exploring the ethics of AI, let us first briefly visit ethics in the context of any software-centric technology. The ethics of other technologies (such as biotechnology) is equally crucial but outside the scope of this primer.

Ethics, in other words, is the set of “rules” or “decision paths” that help determine what is good or right. Given this, one may say that the ethics of tech is simply the set of “rules” or “decision paths” used to determine its “behavior”. After all, isn’t technology always intended for good or right outcomes?

Software products, when designed and tested well, do arrive at predictable outputs for predictable inputs via such a set of rules or decision paths, typically captured in a design document as a sequence diagram or user story. But how does the team determine what is a good or right outcome, and for whom? Is it universally good or only for some? Is it good in certain contexts and not in others? Is it good against some yardsticks but not so good against others? These discussions, the questions and the answers *chosen* by the team, are critical! Therein lies ethics. In the very process of being created, technology loses its neutral standing. It no longer remains just another means to an end but becomes the living embodiment of the opinions, awareness and ethical resolve of its creators. Thus the ethics of a technology (or product) starts with the ethics of its creation, and its creators.

Finding “True North” for AI

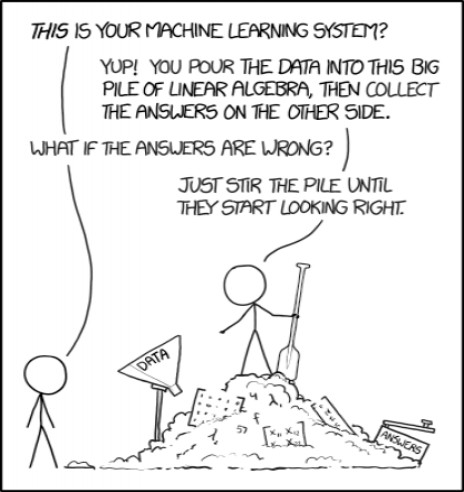

So far, we understand ethics, we understand why technology is not neutral, and we vaguely understand this has implications. But how do we apply this to AI, wherein the path from inputs to outputs is neither visible nor obvious; where the output is not a certainty but merely a prediction? In AI, specifically machine learning (the subset of AI that can currently be implemented realistically at scale), missing data, missed inputs, missed decision paths and missed discussions all have a bearing on the “quality” of the prediction. Choosing to use the prediction irrespective of its “quality” has a bearing on the “quality” of the end outcomes. And the “quality” of end outcomes has a bearing on the “quality” of its impact on humans. Here “quality” means ethical quality, not zero-defect software. In other words, the ethics of AI lies in the ethical quality of its prediction, the ethical quality of the end outcomes drawn from it, and the ethical quality of the impact it has on humans.

Ethical quality can be understood in the context of this definition. What are the moral obligations of an AI and its creators? How well does it declare its moral obligations and duties? How well does it satisfy its moral obligations and duties towards other humans?

I like to ask: how right, how fair and how just are an algorithmic system’s output, outcome and impact? How well can it, and how well does it, identify and declare the contexts in which it is right, fair and just, as well as the contexts in which it is not? Can it control how and where it is used? What are the implications of losing control of this context? Being answerable to these questions constitutes the moral obligations and duties of developers of AI.

Why does this matter?

As cliched as this sounds now, AI has the potential to “change the world”. Given the dizzying pace of AI mainstreaming, thanks to accessible compute power and open source machine learning libraries, it is easy to see that this change is going to be rapid and at scale. Anything built for speed and scale requires critical examination of its impact, for unintended harms will also occur at scale. Add to this the unique ways in which AI has the potential to cause “harm”, and the urgency is real. Add further the increasing ease with which individuals (consider: non-state malicious actors) can deploy state-of-the-art machine learning systems at scale, and the urgency hits you.

So what are the “harms” that AI can cause, and what is the impact of those harms at scale? Kate Crawford, in her 2017 NeurIPS talk, provides the structure to think about this. To summarise:

A “harm” is caused when a prediction or end outcome negatively impacts an individual’s ability to establish their rightful personhood (harms of representation), leading to or independently impacting their ability to access resources (harms of allocation).

An individual’s personhood is their identity. In other words, incorrectly representing or failing to represent an individual’s identity in a machine learning system is a harm. Any decision made by this system thereafter, with regard to that individual, is a harm as well. Extending her definition of harms of representation to all involved, I think failing to accurately represent the system to the individual is also a harm. The ethical obligations placed upon a technology and its creators demand that they work towards mitigating all such harms. Hence the need for ethics of AI.

Applying ethical considerations to our individual or personal situations is natural to us. We are quick to spot instances when we were unfairly treated, for example! But applying this for others, in larger, more complex contexts when we are two steps removed from them, is extremely hard. I wager it can’t be done. Not alone, not by one individual. But as a group, we must.

Mapping the landscape

Spread across the two definitions of ethics is the landscape of issues collectively referred to as “Ethics of AI”. These issues point to the ethical quality of the predictions, of the end outcomes, or of their direct and indirect impact on humans. I summarise them in categories like so:

* In the realm of “what AI is” (i.e. datasets, models and predictions) are issues of:

1. Bias and Fairness

2. Accountability and Remediability

3. Transparency, Interpretability and Explainability

* In the realm of “what AI does” are issues of:

1. Safety

2. Human-AI interaction

3. Cyber-security and Malicious Use

4. Privacy, Control and Agency (or lack thereof, i.e. Surveillance)

* In the realm of “what AI impacts” are issues related to:

1. Automation, Job loss, Labor trends

2. Impact to Democracy and Civil rights

3. Human-Human interaction

* In the realm of “what AI can be” are issues related to threats from human-like cognitive abilities and concerns around singularity, control going all the way up to debates around robot rights (akin to human rights). For this blog, I will steer clear of this realm altogether.

What AI is

While some would like to believe that sentient AI is fast approaching, or even surpassing, human intelligence and gaining the ability to move from knowledge to wisdom, for the most part it isn’t. Given this, assume that when I say “AI” I’m referring to a machine learning system consisting of a learner (model) that, given a set of inputs (data), is able to learn *something* and use that learning to *infer* something else (predictions). (Note: in Statistics “inference” and “prediction” are two very different things; but the machine learning community uses them interchangeably, or in some cases uses prediction for training data and inference for test or new data.) To summarise, AI is data, model and predictions. Ethical issues that stem from problems within the data, the models or the predictions fall in the realm of “ethics of what AI is”.
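To make “data, model and predictions” concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `NearestNeighbourModel` class, the income figures and the loan labels are hypothetical, and a nearest-neighbour rule is only one of the simplest possible learners. The point is that the prediction is entirely a product of the data the model was given.

```python
from dataclasses import dataclass


@dataclass
class NearestNeighbourModel:
    """A toy learner (hypothetical): memorises training data, predicts by proximity."""
    examples: list  # (feature, label) pairs seen during training

    @classmethod
    def fit(cls, data):
        # "Learning" here is nothing more than storing the examples.
        return cls(examples=list(data))

    def predict(self, x):
        # The prediction is the label of the closest training example.
        _, label = min(self.examples, key=lambda ex: abs(ex[0] - x))
        return label


# Data: made-up (annual income in thousands, loan decision) pairs.
training_data = [(20, "deny"), (35, "deny"), (60, "approve"), (90, "approve")]

# Model: learned from the data.
model = NearestNeighbourModel.fit(training_data)

# Prediction: inferred for an applicant the model has never seen.
prediction = model.predict(55)
```

If the training data leaves out or misrepresents a group of applicants, the model has no way of knowing, and its predictions for that group inherit the gap.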

A lot of the ethics research, news and conversation in AI is around BIAS. Rightfully so, for a failure to identify or correct for such biases leads to unfair outcomes. It is also something AI researchers believe can be quantified and fixed in code. So what is bias, where does it stem from, and how does it creep into a machine’s intelligence? How does it impact fairness of outcomes? Given there is bias and algorithms are a black box, how can we hold them accountable? What efforts are underway to open up the black box and make algorithmic systems transparent and explainable? Coming soon in Part 2!
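As a rough illustration of what “quantified in code” can look like, the sketch below compares approval rates across groups, a measure sometimes called the demographic parity difference. This is my own example under stated assumptions, not a method from any particular paper: the function names `approval_rates` and `approval_gap`, the group labels and the decisions are all hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved decisions for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        if outcome == "approve":
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def approval_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions).values()
    return max(rates) - min(rates)


# Made-up loan decisions as (group, outcome) pairs.
decisions = [
    ("A", "approve"), ("A", "approve"), ("A", "approve"), ("A", "deny"),
    ("B", "approve"), ("B", "deny"), ("B", "deny"), ("B", "deny"),
]

rates = approval_rates(decisions)  # per-group approval rates
gap = approval_gap(decisions)      # spread between best- and worst-treated group
```

A large gap is a signal worth investigating, not a verdict. Equally, a gap of zero does not by itself establish that a system is fair; which measure is appropriate depends on the context.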

What AI does

The applications built using machine learning mechanisms take the technology-human interaction to a whole new level. Autonomous robots, i.e. robots that operate without requiring real-time or frequent instructions from a human (e.g. automobiles, drones, vacuum cleaners, Twitter bots etc.), impact and change our operating environments. Indirectly they change our behavior. Humans adapt. One might say they can adapt to these new operating environments too. For example, when cars were introduced humans adapted to new “rules” of engagement on the roads. However, with cars also came speed limits, road signs, traffic laws, safety regulations and other checks and balances to ensure safe and smooth technology-human interaction. As AI applications flood our world, researchers are recognising an urgent need to evaluate their impact on human safety, on human perception of and interaction with autonomous robots, and on our privacy, dignity and sense of agency. We’ll explore more in Part 3.

What AI impacts

Technology products often trigger second and third order consequences that are not always obvious at first. This is especially so when products outgrow their original intent and audience and reach a scale where a one-size-fits-all model fails miserably. We are seeing this happen with social media impacting democracies around the world as companies like Facebook, Google and Twitter struggle to rein in their algorithms and the at-scale gaming of their platforms. With AI, the second and third order consequences are not restricted to the applications or platforms alone, but stretch beyond them. In Part 3, I will explore how AI is impacting jobs and in many cases worsening inequalities in access to opportunity and income. I will also explore how it may impact human-to-human and human-to-society interactions: how, as the lines between real person and automated bot start to blur online and humans are bombarded with “information”, often fake, at scale, the very notion of trust, and therefore safety, is shaken. Stay tuned for Part 3.